Machine Learning (ML) Linear Regression Exercises

Machine Learning (ML) Linear Regression Practice Questions

In the standard linear regression equation y = β0 + β1x, what does the term y represent?

Here is the breakdown of the equation variables:

- Dependent Variable: The variable y is the outcome we are trying to estimate or predict based on the input.

- The independent variable, or input feature, is x, not y.

- The coefficient that determines the slope of the line is β1.

- The intercept of the regression line is β0.

Quick Recap of Machine Learning (ML) Linear Regression Concepts

If you are not clear on the concepts of Linear Regression, you can quickly review them here before practicing the exercises. This recap highlights the essential points and logic to help you solve problems confidently.

Foundations of Linear Regression in Machine Learning

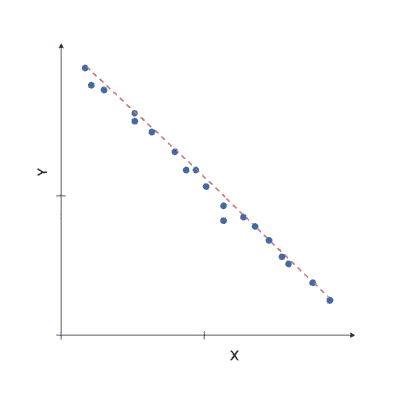

Linear Regression is a supervised learning algorithm used to model the relationship between input variables (features) and a continuous output variable (target). It does this by fitting a straight line or flat surface through the data.

The goal is simple: use known data to learn a mathematical relationship, then use it to make predictions.

- Inputs → features (X)

- Output → target variable (y)

- Model → learns how X affects y

The word linear means the prediction is a weighted sum of the inputs plus a constant, so each feature contributes in proportion to its value. With one feature, this is a straight line. With many features, it becomes a flat plane or hyperplane.

Linear Regression is widely used because it is:

- Fast to train

- Easy to interpret

- Mathematically well understood

- Very effective when relationships are close to linear

Even in modern AI systems, Linear Regression is often used as a baseline model and as a building block for more advanced algorithms.

Mathematical Model of Linear Regression

Linear Regression represents the relationship between inputs and output using a mathematical equation. This equation defines how each feature contributes to the final prediction.

General form of Linear Regression:

y = β₀ + β₁x₁ + β₂x₂ + ... + βₙxₙ

| Symbol | Meaning |
|---|---|
| y | Predicted output (target variable) |
| x₁, x₂, …, xₙ | Input features |
| β₀ | Intercept (bias term) |
| β₁, β₂, …, βₙ | Coefficients (weights of features) |

The intercept β₀ represents the value of y when all input features are zero. Each coefficient βᵢ shows how much the output changes when the corresponding feature xᵢ increases by one unit while all other features are held constant.
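To make the formula and the one-unit interpretation concrete, here is a minimal Python sketch. The helper name `predict` and the coefficient values are hypothetical, chosen only for illustration.

```python
def predict(intercept, coefs, features):
    """Compute y = β₀ + β₁x₁ + ... + βₙxₙ for a single sample."""
    if len(coefs) != len(features):
        raise ValueError("need exactly one coefficient per feature")
    return intercept + sum(b * x for b, x in zip(coefs, features))

# Hypothetical model with two features: β₀ = 3, β₁ = 2, β₂ = -0.5
beta0, betas = 3.0, [2.0, -0.5]

base = predict(beta0, betas, [4.0, 10.0])    # 3 + 2*4 - 0.5*10 = 6.0
bumped = predict(beta0, betas, [5.0, 10.0])  # x₁ up by one unit, x₂ unchanged
print(base, bumped - base)  # 6.0 2.0
```

The change in the prediction equals β₁ = 2 because only x₁ moved, and setting every feature to zero returns the intercept β₀.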

There are two main forms of Linear Regression:

- Simple Linear Regression — uses one input feature

- Multiple Linear Regression — uses multiple input features

The learning process of Linear Regression is all about finding the best values for these coefficients so that the predicted values are as close as possible to the actual data.

Data Representation and Feature Space in Linear Regression

Before Linear Regression can learn, the data must be represented in a mathematical form that the model can work with. This is done using vectors and matrices.

Each data point (sample i) is written as a feature vector of its n feature values:

xᵢ = [xᵢ₁, xᵢ₂, xᵢ₃, …, xᵢₙ]

All feature vectors together form a feature matrix:

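$$
X = \begin{bmatrix}
x_{11} & x_{12} & x_{13} & \cdots & x_{1n} \\
x_{21} & x_{22} & x_{23} & \cdots & x_{2n} \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
x_{m1} & x_{m2} & x_{m3} & \cdots & x_{mn}
\end{bmatrix}
$$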

The target values are stored in a vector:

y = [y₁, y₂, y₃, …, yₘ]

Each row of X represents one data sample, and each column represents a feature. Together they describe the feature space, which is the geometric space where the regression line or plane is fitted.
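A minimal NumPy sketch of this layout follows, assuming NumPy is available. The numbers are made-up illustrative values, not real data. It also prepends a column of 1s so that the intercept β₀ can be treated like any other coefficient, a common convention when solving for all coefficients at once.

```python
import numpy as np

# Hypothetical data: m = 4 samples (rows), n = 2 features (columns), e.g. size and age
X = np.array([
    [1400.0, 10.0],
    [1600.0, 5.0],
    [1700.0, 20.0],
    [1875.0, 2.0],
])
y = np.array([245.0, 312.0, 279.0, 308.0])  # one target value per row of X

m, n = X.shape  # rows = samples, columns = features
assert y.shape == (m,)

# Prepend a column of 1s so β₀ multiplies a constant "feature"
X_b = np.column_stack([np.ones(m), X])
print(X_b.shape)  # (4, 3)
```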

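Feature scaling and normalization are often applied so that features with large numerical ranges do not dominate the learning process. Rescaling does not change the predictions of an ordinary least-squares fit, but it speeds up gradient descent and matters for regularized models, whose penalty depends on coefficient size.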
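One common scaling choice is standardization (z-scores): subtract each column's mean and divide by its standard deviation. The sketch below assumes NumPy, and `standardize` is a hypothetical helper name. It returns the training mean and standard deviation so the same transformation can be reused on new data at prediction time.

```python
import numpy as np

def standardize(X):
    """Rescale each column of X to mean 0 and standard deviation 1."""
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    std[std == 0] = 1.0  # avoid dividing by zero; constant columns become all zeros
    return (X - mean) / std, mean, std

# Hypothetical features on very different scales (square feet vs. years)
X = np.array([[1400.0, 10.0], [1600.0, 5.0], [1700.0, 20.0], [1875.0, 2.0]])
X_scaled, mu, sigma = standardize(X)

# New data must be transformed with the *training* statistics
x_new = (np.array([[1500.0, 8.0]]) - mu) / sigma
```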

Cost Function and Error Measurement in Linear Regression

Linear Regression learns by minimizing the difference between predicted values and actual values. This difference is called the residual:

Residual = yᵢ − ŷᵢ

Here, yᵢ is the true value and ŷᵢ is the predicted value for the i-th sample.

To measure how well the model fits the data, we use a cost function. The most common one is Mean Squared Error (MSE):

MSE = (1/m) Σ (yᵢ − ŷᵢ)²

where the sum runs over all m samples, i = 1, …, m.

- Squares the residuals to penalize large errors more heavily

- Takes the average over all data points

- Provides a smooth function that is easy to optimize

Another common metric is Root Mean Squared Error (RMSE):

RMSE = √MSE

Minimizing the cost function allows the model to find the best coefficients that produce predictions closest to the true target values. This process is the core of the learning phase in Linear Regression.
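The residual, MSE, and RMSE definitions translate directly into code. This is a plain-Python sketch with hypothetical helper names `mse` and `rmse` and made-up values.

```python
import math

def mse(y_true, y_pred):
    """Mean Squared Error: the average of the squared residuals."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    residuals = [yt - yp for yt, yp in zip(y_true, y_pred)]
    return sum(r * r for r in residuals) / len(residuals)

def rmse(y_true, y_pred):
    """Root Mean Squared Error, expressed in the same units as the target."""
    return math.sqrt(mse(y_true, y_pred))

y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.0, 8.0, 9.5]  # residuals: 0.5, 0.0, -1.0, -0.5
print(mse(y_true, y_pred), rmse(y_true, y_pred))  # 0.375 and roughly 0.612
```

The single residual of size 1.0 contributes 1.0 of the 1.5 total squared error, which illustrates how squaring penalizes large errors more heavily.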

Gradient Descent Optimization in Linear Regression

Gradient Descent is a widely used iterative method for finding the optimal coefficients in Linear Regression. The goal is to minimize the cost function (MSE) by repeatedly updating the coefficients.

Each coefficient (β) is updated in the direction that reduces the error. The update rule is:

θ = θ − α ∇J(θ)

- θ represents the coefficient vector [β₀, β₁, …, βₙ]

- α is the learning rate — controls step size

- ∇J(θ) is the gradient of the cost function with respect to θ

The gradient points in the direction of the steepest increase of the cost function. Subtracting it moves the coefficients toward the minimum.

Key points to remember:

- Learning rate too small → slow convergence

- Learning rate too large → overshooting or divergence

- Gradient Descent continues until convergence or a maximum number of iterations

- Variants like Stochastic Gradient Descent (SGD) or Mini-Batch Gradient Descent can speed up learning on large datasets

Gradient Descent provides an intuitive and general approach to optimization and forms the foundation for many machine learning algorithms beyond Linear Regression.
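Below is a minimal batch Gradient Descent sketch in NumPy, assuming the cost is J(θ) = MSE = (1/m)·‖Xθ − y‖², whose gradient is (2/m)·Xᵀ(Xθ − y). The function name, default learning rate, iteration cap, and stopping tolerance are illustrative choices, not prescribed values. The data is generated without noise from y = 4 + 3x so the correct answer is known.

```python
import numpy as np

def gradient_descent(X, y, alpha=0.1, n_iters=5000, tol=1e-10):
    """Fit θ by batch gradient descent on J(θ) = (1/m)·||Xθ − y||².

    X must already contain a leading column of 1s for the intercept β₀.
    """
    m, k = X.shape
    theta = np.zeros(k)
    for _ in range(n_iters):
        residual = X @ theta - y              # ŷ − y for every sample
        grad = (2.0 / m) * (X.T @ residual)   # ∇J(θ)
        theta_new = theta - alpha * grad      # θ = θ − α∇J(θ)
        if np.max(np.abs(theta_new - theta)) < tol:  # updates have become tiny
            return theta_new
        theta = theta_new
    return theta  # iteration cap reached

# Synthetic, noise-free data from y = 4 + 3x
x = np.linspace(0.0, 1.0, 20)
X = np.column_stack([np.ones_like(x), x])
y = 4.0 + 3.0 * x

theta = gradient_descent(X, y)
print(theta.round(3))  # close to [4. 3.]
```

With this data, a learning rate much above roughly 0.8 makes the updates overshoot and diverge, while a much smaller one needs many more iterations, matching the trade-off listed above.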

Closed-Form Solution (Normal Equation) in Linear Regression

Besides Gradient Descent, Linear Regression can also find optimal coefficients using a closed-form solution, called the Normal Equation. This method calculates the exact values that minimize the cost function without iteration.

The formula is:

θ = (Xᵀ X)⁻¹ Xᵀ y

- X is the feature matrix (with a column of 1s for the intercept)

- y is the target vector

- θ is the vector of coefficients [β₀, β₁, …, βₙ]

- (Xᵀ X)⁻¹ Xᵀ y directly gives the coefficient values that minimize MSE
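A minimal NumPy sketch of the Normal Equation, reusing the same noise-free y = 4 + 3x data idea. Instead of forming (XᵀX)⁻¹ explicitly, it solves the equivalent linear system (XᵀX)θ = Xᵀy with `np.linalg.solve`, which gives the same θ and is generally more numerically stable. `normal_equation` is a hypothetical helper name.

```python
import numpy as np

def normal_equation(X, y):
    """Solve (XᵀX)θ = Xᵀy; X must include a leading column of 1s."""
    return np.linalg.solve(X.T @ X, X.T @ y)

x = np.linspace(0.0, 1.0, 20)
X = np.column_stack([np.ones_like(x), x])  # intercept column + one feature
y = 4.0 + 3.0 * x

print(normal_equation(X, y))  # ≈ [4. 3.]

# If XᵀX is singular (e.g. a duplicated feature), np.linalg.solve raises LinAlgError;
# np.linalg.lstsq still returns a least-squares solution.
theta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)
```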

Advantages of the Normal Equation:

- No need to choose a learning rate

- Exact solution (no approximation)

- Good for small datasets

Limitations:

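- Computationally expensive when there are many features: forming XᵀX costs about O(m·n²) and inverting it about O(n³), where n is the number of features (high dimensionality)

- Requires XᵀX to be invertible; it is singular when features are perfectly collinear or when there are more features than samples, in which case a pseudo-inverse is used

- Gradient Descent is preferred for very large datasets or very many features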

In practice, the choice between Gradient Descent and Normal Equation depends on dataset size and computational resources.

Model Assumptions in Linear Regression

For Linear Regression to provide reliable predictions, several assumptions about the data must hold. Violating these assumptions can reduce model accuracy and interpretability.

- Linearity: The relationship between features and target is linear.

- Independence: Observations are independent of each other.

- Homoscedasticity: Residuals have constant variance across all levels of features.

- No multicollinearity: Features are not highly correlated with each other.

- Normality of errors: Residuals are normally distributed, especially important for confidence intervals and hypothesis testing.

Checking assumptions is crucial. Common techniques include:

- Plotting residuals vs predicted values to check homoscedasticity

- Using correlation matrices to detect multicollinearity

- Histogram or Q-Q plots of residuals to check normality

- Scatter plots to verify linearity between features and target

If assumptions are violated, transformations, feature selection, or more robust models may be required.
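As an example of the correlation-matrix check for multicollinearity, the NumPy sketch below flags feature pairs whose absolute Pearson correlation exceeds a threshold. The helper name `correlated_pairs`, the 0.8 cut-off, and the data are hypothetical; in the data, "rooms" is deliberately built as a slightly perturbed rescaling of "size".

```python
import numpy as np

def correlated_pairs(X, names, threshold=0.8):
    """Return (name_i, name_j, r) for feature pairs with |r| above threshold."""
    corr = np.corrcoef(X, rowvar=False)  # columns are treated as variables
    pairs = []
    n = corr.shape[0]
    for i in range(n):
        for j in range(i + 1, n):  # upper triangle only, skip the diagonal
            if abs(corr[i, j]) > threshold:
                pairs.append((names[i], names[j], round(float(corr[i, j]), 3)))
    return pairs

# Hypothetical data: "rooms" is almost a rescaled copy of "size"
size = np.array([1400.0, 1600.0, 1700.0, 1875.0, 1100.0, 2350.0])
rooms = size / 400.0 + np.array([0.1, -0.2, 0.0, 0.1, -0.1, 0.2])
age = np.array([10.0, 5.0, 20.0, 2.0, 35.0, 8.0])
X = np.column_stack([size, rooms, age])

print(correlated_pairs(X, ["size", "rooms", "age"]))
```

Only the (size, rooms) pair is flagged here; a common remedy is to drop or combine one of the two features.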

Interpretation of Coefficients in Linear Regression

Once the Linear Regression model is trained, each feature has a corresponding coefficient (β). Understanding these coefficients is essential for interpreting how features affect the target variable.

- Intercept (β₀): Value of the target when all features are zero.

- Positive coefficient (βᵢ > 0): Increasing this feature increases the predicted target.

- Negative coefficient (βᵢ < 0): Increasing this feature decreases the predicted target.

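- Magnitude: Shows how strongly the target responds to a one-unit change in the feature. Magnitudes are only directly comparable across features measured on the same scale, for example after standardization.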

Example: If predicting house price:

- β₁ (size in sq. ft.) = 50 → Every extra square foot increases price by 50 units

- β₂ (age of house) = -200 → Each year of age decreases price by 200 units
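The house-price coefficients can be checked numerically. In this sketch the slopes 50 and -200 come from the example, while the intercept of 50,000 is a hypothetical value added only so that full prices can be computed.

```python
BETA0 = 50_000.0   # hypothetical intercept, for illustration only
BETA_SIZE = 50.0   # price change per extra square foot (from the example)
BETA_AGE = -200.0  # price change per extra year of age (from the example)

def price(size_sqft, age_years):
    """Predicted price under the illustrative two-feature model."""
    return BETA0 + BETA_SIZE * size_sqft + BETA_AGE * age_years

a = price(1500, 10)  # reference house
b = price(1600, 10)  # 100 sq. ft. larger, same age
c = price(1500, 15)  # same size, 5 years older
print(b - a, c - a)  # 5000.0 -1000.0
```

The differences do not depend on the intercept: each is the coefficient times the change in its own feature, with the other feature held fixed.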

Interpretation allows businesses or analysts to understand which features matter most and in which direction they affect predictions.

In multiple regression, pay attention to multicollinearity — coefficients can be misleading if features are highly correlated.

Common Problems in Linear Regression

While Linear Regression is simple and interpretable, it can face several issues if assumptions are violated or the data has certain characteristics.

- Underfitting: Model is too simple to capture the relationship, leading to high bias.

- Overfitting: Model fits the training data too closely, failing to generalize to new data.

- Outliers: Extreme values can disproportionately influence the regression line.

- Multicollinearity: High correlation between features makes coefficient estimates unstable.

- Non-linear relationships: Linear model cannot capture complex non-linear patterns.

Detecting these problems early is crucial. Common techniques include:

- Residual plots to detect non-linearity or heteroscedasticity

- Variance Inflation Factor (VIF) to detect multicollinearity

- Leverage and Cook’s distance to detect influential outliers

- Regularization methods to reduce overfitting (discussed in the next section)
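The Variance Inflation Factor for feature j is VIF_j = 1 / (1 − R²_j), where R²_j comes from regressing feature j on all the other features. A minimal NumPy sketch follows; the helper name `vif` and the data are hypothetical, and the thresholds of 5 or 10 often quoted for a "high" VIF are rules of thumb rather than fixed standards.

```python
import numpy as np

def vif(X):
    """VIF for each column of X (features only, no intercept column)."""
    m, n = X.shape
    out = []
    for j in range(n):
        target = X[:, j]
        # Regress feature j on an intercept plus every other feature
        others = np.column_stack([np.ones(m), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        out.append(np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2))
    return np.array(out)

# Hypothetical data: "rooms" is nearly a rescaled copy of "size"
size = np.array([1400.0, 1600.0, 1700.0, 1875.0, 1100.0, 2350.0])
rooms = size / 400.0 + np.array([0.1, -0.2, 0.0, 0.1, -0.1, 0.2])
age = np.array([10.0, 5.0, 20.0, 2.0, 35.0, 8.0])
X = np.column_stack([size, rooms, age])

print(vif(X).round(1))  # size and rooms get very large VIFs, age a small one
```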

Awareness of these common problems helps ensure that Linear Regression produces reliable and interpretable results.

Regularized Linear Regression

Regularization is used to prevent overfitting in Linear Regression by penalizing large coefficients. It adds a penalty term to the cost function, discouraging the model from relying too heavily on any one feature.

The two most common regularization techniques are:

- Ridge Regression (L2 Regularization): Adds the squared magnitude of coefficients to the cost function:

Cost = MSE + λ Σ βᵢ²

- Lasso Regression (L1 Regularization): Adds the absolute value of coefficients to the cost function:

Cost = MSE + λ Σ |βᵢ|

In both formulas, λ is the regularization parameter controlling the strength of the penalty, and the sums run over i = 1, …, n (the intercept β₀ is usually not penalized). Lasso can shrink some coefficients exactly to zero, effectively performing feature selection.

Key points about regularization:

- Reduces model complexity and overfitting

- Balances bias and variance

- Helps when there are many correlated or irrelevant features

- λ (lambda) needs to be tuned — usually via cross-validation

Regularized Linear Regression is especially useful in real-world datasets where overfitting is common.
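Ridge Regression still has a closed-form solution. Assuming the cost is (1/m)·‖y − Xθ‖² + λ·Σ βᵢ² with the intercept left unpenalized, setting the gradient to zero gives (XᵀX + mλD)θ = Xᵀy, where D is the identity matrix with its first diagonal entry set to 0. The NumPy sketch below implements that under these assumptions; `ridge_fit` and the synthetic data are hypothetical. Lasso has no such closed form and is typically solved iteratively, for example with coordinate descent.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Minimize (1/m)·||y − Xθ||² + λ·Σ_{i≥1} θᵢ² in closed form.

    X must include a leading column of 1s; the intercept θ₀ is not penalized.
    """
    m, k = X.shape
    D = np.eye(k)
    D[0, 0] = 0.0  # do not shrink the intercept
    return np.linalg.solve(X.T @ X + m * lam * D, X.T @ y)

# Synthetic data: true intercept 1.0, true slopes [2.0, -1.0, 0.5], small noise
rng = np.random.default_rng(0)
x = rng.normal(size=(30, 3))
X = np.column_stack([np.ones(30), x])
y = 1.0 + x @ np.array([2.0, -1.0, 0.5]) + rng.normal(scale=0.1, size=30)

for lam in [0.0, 0.1, 1.0]:
    print(lam, np.round(ridge_fit(X, y, lam), 3))  # slopes shrink as λ grows
```

With λ = 0 the result matches ordinary least squares; larger λ pulls the slope coefficients toward zero, trading a little bias for lower variance. In practice λ would be chosen by cross-validation rather than fixed by hand.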

Real-World Applications of Linear Regression

Linear Regression is widely used across industries due to its simplicity, interpretability, and efficiency. It helps make predictions, understand relationships, and guide business decisions.

- House Price Prediction: Predicting property prices based on features like size, location, and number of bedrooms.

- Salary Prediction: Estimating income based on experience, education, and skills.

- Sales Forecasting: Predicting product demand using historical sales, marketing spend, and seasonal factors.

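- Risk Assessment: Financial institutions estimating continuous quantities such as expected insurance claim amounts or potential losses. (Predicting whether a loan will default is a yes/no outcome, usually handled by classification methods such as logistic regression.)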

- Marketing Analytics: Understanding the impact of ad spend, discounts, or campaigns on customer purchases.

- Medical and Health Analytics: Predicting blood pressure, glucose levels, or disease progression from patient data.

These examples demonstrate how Linear Regression remains a practical tool for real-world data analysis, even as machine learning grows more complex.

Summary of Linear Regression

Linear Regression is a fundamental supervised learning algorithm used to model the relationship between one or more input features and a continuous output variable.

- It assumes a linear relationship between features and target.

- Model coefficients (β) determine the effect of each feature on the target.

- Learning is done by minimizing a cost function, typically Mean Squared Error (MSE).

- Optimization can be performed via Gradient Descent or the Normal Equation.

- Regularization (Ridge, Lasso) helps prevent overfitting and manage feature importance.

- Model assumptions (linearity, independence, homoscedasticity, normality, no multicollinearity) must be checked for reliable predictions.

Linear Regression remains a powerful, interpretable, and widely applied tool in machine learning, providing a solid foundation for understanding more complex models.

Key Takeaways of Linear Regression

- Linear Regression predicts a continuous target based on one or more input features.

- It assumes a linear relationship between features and target.

- Coefficients indicate the magnitude and direction of each feature’s impact.

- Residuals and Mean Squared Error measure prediction errors.

- Gradient Descent and Normal Equation are used to find optimal coefficients.

- Regularization (Ridge/Lasso) helps prevent overfitting and manage multicollinearity.

- Checking assumptions (linearity, independence, homoscedasticity, normality, no multicollinearity) ensures reliability.

- Widely applied in house price prediction, salary forecasting, sales estimation, risk modeling, and medical analytics.

- Serves as a foundation for understanding more advanced machine learning models.

About This Exercise: Linear Regression

Linear Regression is a fundamental Machine Learning algorithm used to understand and model the relationship between variables. It is often the first algorithm learners encounter because it introduces core ideas such as prediction, error minimization, and model evaluation in a simple and intuitive way.

On Solviyo, these Linear Regression exercises are designed to help you build a strong conceptual foundation while gradually introducing practical scenarios. Instead of focusing only on formulas, the exercises guide you to understand how regression models behave with real data.

Understanding Linear Regression in Machine Learning

Linear Regression models the relationship between one or more independent variables and a dependent variable by fitting a straight line (or, with several inputs, a plane or hyperplane) to observed data. The fitted line is the one that minimizes the squared prediction error on that data.

Through these Machine Learning exercises, you will learn how regression coefficients influence predictions and how small changes in input values affect the output. This understanding is essential before moving to more complex algorithms.

Important Concepts Covered in These Exercises

The Linear Regression MCQ exercises on Solviyo cover both theoretical and applied concepts that are commonly tested in exams and interviews.

- Simple Linear Regression and its mathematical representation

- Multiple Linear Regression with more than one feature

- Interpretation of slope, intercept, and regression coefficients

- Role of residuals and error in model performance

Model Assumptions and Evaluation

Understanding the assumptions behind linear regression is critical for building reliable Machine Learning models. These exercises help you recognize when linear regression is appropriate and when it may fail.

You will practice identifying common assumptions such as linearity, independence, and constant variance, along with evaluating model performance using error metrics like Mean Squared Error (MSE) and Root Mean Squared Error (RMSE).

Practical Applications of Linear Regression

Linear Regression is widely used in real-world Machine Learning and data science applications, including price prediction, trend analysis, demand forecasting, and risk estimation.

By solving these exercises, you will learn how theoretical concepts translate into practical decision-making, making it easier to apply linear regression to real datasets and projects.

Why Practice Linear Regression Exercises on Solviyo

Solviyo focuses on structured, practice-driven learning. Each Linear Regression exercise is carefully crafted to reinforce understanding, not rote memorization. The questions progress from basic concepts to more analytical scenarios.

These exercises also prepare you for advanced Machine Learning topics such as logistic regression, regularization techniques, and optimization algorithms like gradient descent.

Who Should Practice These Linear Regression Exercises

These exercises are ideal for students, Machine Learning beginners, and professionals preparing for technical interviews or academic assessments. If you aim to master Machine Learning step by step, Linear Regression is an essential milestone.